2025 Interconnects Year In Review

Posted by Bud on 25-02-17 05:16

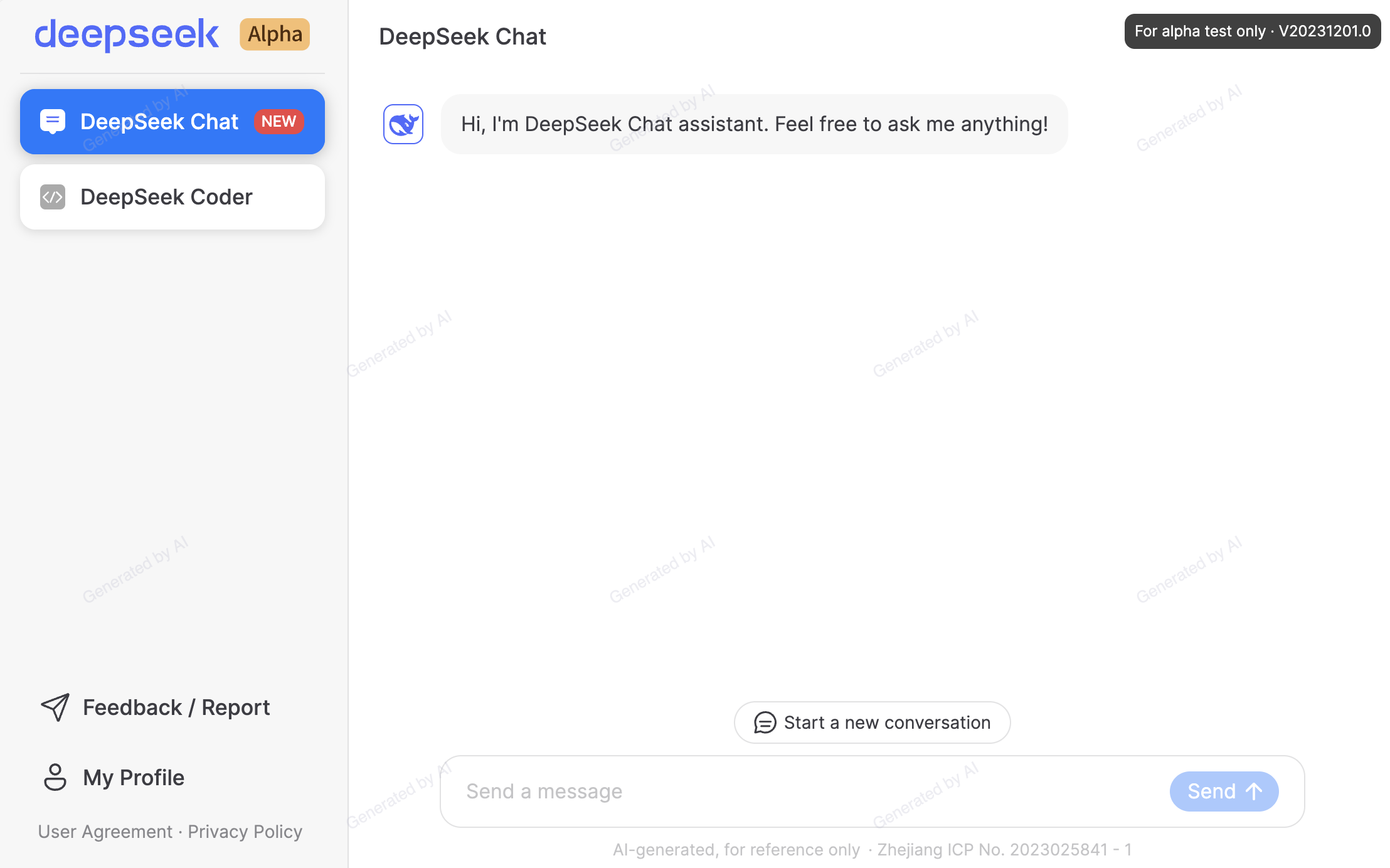

DeepSeek can understand and respond to human language much as a person would. Forbes reported that NVIDIA set a record and saw a $589 billion loss as a result, while other major stocks like Broadcom (another AI chip company) also suffered heavy losses. Ethical considerations and limitations: While DeepSeek-V2.5 represents a significant technological advancement, it also raises important ethical questions. Despite its popularity with international users, the app appears to censor answers to sensitive questions about China and its government. It beat ChatGPT, which had been the most popular free AI app for the past few years. It’s worth a read for a few distinct takes, some of which I agree with. Read the essay here: Machinic Desire (PDF). "Despite their apparent simplicity, these problems often involve complex solution techniques, making them excellent candidates for constructing proof data to enhance theorem-proving capabilities in Large Language Models (LLMs)," the researchers write. "Our immediate goal is to develop LLMs with strong theorem-proving capabilities, aiding human mathematicians in formal verification projects, such as the recent project of verifying Fermat’s Last Theorem in Lean," Xin said. "A major concern for the future of LLMs is that human-generated data may not meet the growing demand for high-quality data," Xin said.

Recently, Alibaba, the Chinese tech giant, also unveiled its own LLM called Qwen-72B, which has been trained on high-quality data consisting of 3T tokens and has an expanded context window of 32K. Not just that, the company also added a smaller language model, Qwen-1.8B, touting it as a gift to the research community. "Our work demonstrates that, with rigorous verification mechanisms like Lean, it is feasible to synthesize large-scale, high-quality data." "We believe formal theorem proving languages like Lean, which offer rigorous verification, represent the future of mathematics," Xin said, pointing to the growing trend in the mathematical community to use theorem provers to verify complex proofs. Results show DeepSeek LLM outperforming LLaMA-2, GPT-3.5, and Claude-2 on various metrics, in both English and Chinese. Available in both English and Chinese, the LLM aims to foster research and innovation. The open-source nature of DeepSeek-V2.5 may accelerate innovation and democratize access to advanced AI technologies. To run locally, DeepSeek-V2.5 requires a BF16 setup with 80GB GPUs, with optimal performance achieved using eight GPUs. At Middleware, we're dedicated to enhancing developer productivity. Our open-source DORA metrics product helps engineering teams improve efficiency by offering insights into PR reviews, identifying bottlenecks, and suggesting ways to improve team performance across four key metrics.
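The BF16 hardware figure quoted above for running DeepSeek-V2.5 locally can be sanity-checked with rough arithmetic. The sketch below is illustrative only: the parameter count of 236 billion is an assumption that does not appear in this post, and the estimate covers model weights alone, ignoring the KV cache, activations, and framework overhead.

```python
import math

def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate weight memory in GB (10^9 bytes). BF16 uses 2 bytes per parameter."""
    return num_params * bytes_per_param / 1e9

# ASSUMPTION: total parameter count, not stated in the post above.
ASSUMED_PARAMS = 236e9
GPU_MEMORY_GB = 80   # per-GPU memory, from the post
NUM_GPUS = 8         # GPU count for optimal performance, from the post

weights_gb = weight_memory_gb(ASSUMED_PARAMS)
total_gb = GPU_MEMORY_GB * NUM_GPUS
headroom_gb = total_gb - weights_gb
# Smallest number of 80GB GPUs whose combined memory holds the weights alone.
min_gpus_for_weights = math.ceil(weights_gb / GPU_MEMORY_GB)

print(f"weights: {weights_gb:.0f} GB, cluster: {total_gb} GB, headroom: {headroom_gb:.0f} GB")
print(f"GPUs needed for weights alone: {min_gpus_for_weights}")
```

Under that assumption, the weights alone occupy most of the combined memory of eight 80GB cards, which is consistent with eight GPUs being the recommended configuration once runtime memory is added on top.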

This gives you full control over the AI models and ensures complete privacy. This guide is your shortcut to unlocking DeepSeek-R1’s full potential. Future outlook and potential impact: DeepSeek-V2.5’s release could catalyze further developments in the open-source AI community and influence the broader AI industry. Expert recognition and praise: The new model has received significant acclaim from industry professionals and AI observers for its performance and capabilities. The hardware requirements for optimal performance may limit accessibility for some users or organizations. Accessibility and licensing: DeepSeek-V2.5 is designed to be widely accessible while maintaining certain ethical standards. AlphaGeometry uses a geometry-specific language, while DeepSeek-Prover leverages Lean’s comprehensive library, which covers diverse areas of mathematics. On the more challenging FIMO benchmark, DeepSeek-Prover solved four out of 148 problems with 100 samples, while GPT-4 solved none. The researchers plan to extend DeepSeek-Prover’s knowledge to more advanced mathematical fields. "The research presented in this paper has the potential to significantly advance automated theorem proving by leveraging large-scale synthetic proof data generated from informal mathematical problems," the researchers write.

Dependence on Proof Assistant: The system's performance is heavily dependent on the capabilities of the proof assistant it is integrated with. When exploring performance you want to push it, of course. The evaluation extends to never-before-seen exams, including the Hungarian National High School Exam, where DeepSeek LLM 67B Chat exhibits outstanding performance. DeepSeek LLM 7B/67B models, including base and chat versions, are released to the public on GitHub, Hugging Face and also AWS S3. As with all powerful language models, concerns about misinformation, bias, and privacy remain relevant. Implications for the AI landscape: DeepSeek-V2.5’s release signifies a notable advancement in open-source language models, potentially reshaping the competitive dynamics in the field. Its chat version also outperforms other open-source models and achieves performance comparable to leading closed-source models, including GPT-4o and Claude-3.5-Sonnet, on a series of standard and open-ended benchmarks. In-depth evaluations have been conducted on the base and chat models, comparing them to existing benchmarks.
